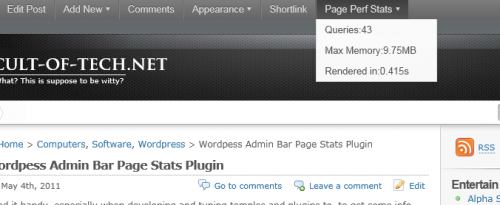

I’m not a big gamer, and when I do game, I tend to spend way too much time playing, then get totally burned out. That’s likely what’s going to happen again, this time with Magicka from Paradox Interactive. Then I’ll scream and cry for a week while my fingers recover.

What is Magicka?

Well, in short, it’s a fantasy RPG that doesn’t take itself, or the whole genre, too seriously. It starts in a Harry Potter-esque castle, with you, the obviously n00b wizard, being given a quest by Vlad (who’s not a vampire, or so he insists) to save some kingdom.

Quick now, run along and get to your save-the-world party, before the other wizards eat all the cheese.

The voice acting is good, if a bit over the top, in a language best described as being one bork-bork-bork away from the Swedish Chef.

The game’s core mechanic is a multi-element casting system that’s almost one too many borks past complicated. You have 8 elements: earth, water, cold, fire, electricity, life, shield, and necromancy. Each type has its own “style” of attack, from electricity’s chain lightning to earth’s hurling of boulders. Some types cancel each other out when combined; others combine into a proton beam of death that would toast even the puffiest Stay Puft Marshmallow Man.

With 5 elemental slots in a spell, there’s plenty to play with and learn. Moreover, in many cases a spell gets stronger the more elements you stack into it. For example, 1 fire makes a nice little flamethrower, but with 5 you’ve got Mount Vesuvius coming out of your staff.

That’s the fun part.

The not-so-fun part is trying to cast them in combat. Movement is Diablo-style point and click, quite a departure if you’re used to WASD-style games at this point. Elements are conjured with q, w, e, r, a, s, d, and f. Right-clicking casts a directional spell, shift+right click casts an area-effect spell, middle-click casts on yourself, and shift+left click swings your sword, but shift+left click with a spell ready casts it on your sword.

Confused yet?

But wait there’s more.

The keys that summon elements can be remapped, but not the buttons that cast them. Have a Logitech mouse with the middle button set to switch between clicking and free-spinning scroll? Better remap that shit, or you’ll never be able to heal yourself.

Equipment: thar be dragons here.

Wizards are equipped with a sword and a staff, which is probably why they drown in anything more than ankle-deep water. There’s no inventory, though, which makes the whole drowning thing even more bizarre (must be the velour robes). The real mystery is why the guy in Minecraft, who can carry almost 2000 cubic meters of iron, can swim without problems but can’t cast a spell to save his life, while a wizard with no inventory whatsoever sinks faster than the rock dropped in the lake next to him.

No wizard is properly equipped without a sword, and Magicka draws on the best of the best. Excalibur makes an early appearance. Since you’re clearly not King Arthur, though, you’ll have to settle for smashing people with the boulder it’s stuck in instead of slicing them to bits. Perhaps that’s not a bad tradeoff, actually.

This isn’t a game about loot. There is an inventory key, but pressing it only brings up a more detailed description of what you’re holding. There’s no money or loot, and the spells you learn are listed under your tiny little health bar. Unfortunately, if you haven’t learned a spell in the game, you can’t use it, even if you’ve memorized the keystrokes. This is a bit of a bother in the arena, where it would be really handy to have haste before you get smashed into oblivion.

The lack of any inventory is actually slightly maddening, because weapons and staffs provide standard RPG-style buffs and special abilities, but you can’t put one away without swapping it for another one on the ground. That staff of healing that keeps you and your shield healed is awesome, but it also heals the guy attacking you and anybody else within a good bit of range, and you can’t put it away for a while to fight. (Not that you should, since you clearly ought to be playing as a ranged character in this case. Which brings us to another “not that you could”: fights become little more than insane free-for-alls.)

The one good thing is that the developers have provided an arena of sorts where you can hone your combat prowess against hordes of angry mobs.

In short, combat boils down to spamming the left, right, and middle mouse buttons while flailing on the element keys, hoping the whole time that you don’t light yourself on fire instead of laying a perimeter of landmines in front of the volcano defensive wall. All the while, you’re running away like the underpowered pansy you really are compared to a forest troll or a dragon.

For all the game has going for it, including druids who summon shrubberies like the Knights Who Say Ni, the game has a huge swath of glitches, bugs, and poor design decisions.

The save system in The Legend of Zelda: Twilight Princess killed that game for me. Not being able to save at any point is quite possibly the single worst design decision a developer can make, and I can’t think of anything lazier. Yeah, Magicka does it that way.

Levels are interspersed with checkpoints where you resume when you die, but otherwise you must complete a whole level in one sitting. Fortunately, levels don’t take very long to complete, so it’s not that big of a deal for the time-sensitive gamer.

Compounding matters is the apparent lack of any form of enemy scaling, either in number or in difficulty. A single-player game appears to have the same number of head-ripping-off trolls as a multiplayer game.

If you watch David “X”’s gameplay video on YouTube, he and his friend easily handle Jormungandr. In single player, I get pwned in 30 seconds. Having no way to receive heals or raises makes for a rather interesting, if one-sided, fight.

Judging by my playthrough so far, I’m going with this: single player is harder than multiplayer by a large margin. It probably doesn’t help that the controls keep prompting me to light myself on fire.

There’s a point where killing yourself in new and interesting ways is amusing, and there’s a point where you just want to move on. Right now, I’m quickly approaching the latter.

Magicka is yet another digital-download-only game. That’s both a bad thing and a good thing in my opinion. The good: you don’t have to go buy a box. The bad: you have to wait for it to download; and if you don’t have Steam installed, you have to wait for that to download, and then you have to wait for the damn patches to download.

I WANT TO PLAY MY DAMN GAME ALREADY!!!!!!!

All told, I spent 4 hours (yes, I’ll admit my internet connection is not as awesome as it should be) waiting for the bloody game to download and install before I could get into playing it.

One final note: Paradox is apparently not known for releasing stable, well-behaved games. Magicka has its share of glitches and bugs, so much so that it was unplayable for many at release. It’s still buggy, and there are still problems, but Paradox appears to be patching them as quickly as it can. I haven’t run into any game-ending bugs, but I have run into a few rather annoying glitches.

So be warned, this game could be a buggy mess, or it may not be.

That said, Steam has just informed me that I’ve already played a total of 117 minutes, and I only got the game before going to bed last night/this morning. For $9.99, it’s well on its way to being a better buy than a movie ticket IMO.

Buy Magicka from Amazon